| Specification | Value |
|---|---|
| Brand | NVIDIA |
| Application | Server |
| Product Status | New |
| Host Interface | PCIe 4.0 x16 |
| Memory Bus Width | 5120-bit |
| CUDA Cores | 6912 |
| Memory Type | HBM2E |
| GPU | GA100 |
| Boost Clock | 1410 MHz |
| TDP | 400W |
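
Peak memory bandwidth follows from the memory bus width and the per-pin data rate: bandwidth in bytes per second = (bus width in bits ÷ 8) × transfers per second. The sketch below works that arithmetic through, assuming the A100's 5120-bit HBM2e interface and a memory clock of 1593 MHz. That clock is a commonly reported figure for this SXM4 model but is not stated in this listing, so treat it as an assumption. The constant and function names are illustrative only.

```python
# Theoretical peak memory bandwidth from bus width and memory clock.
# ASSUMPTION: 1593 MHz HBM2e memory clock (double data rate, so 3.186
# gigatransfers/s per pin). This figure is not stated in the listing.

BUS_WIDTH_BITS = 5120      # A100 HBM2e interface width in bits
MEMORY_CLOCK_MHZ = 1593    # assumed memory clock, see note above


def peak_bandwidth_gb_s(bus_width_bits: int, memory_clock_mhz: float) -> float:
    """Return theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    # Double data rate: two transfers per clock cycle, per pin.
    gigatransfers_per_s = 2 * memory_clock_mhz / 1000
    # Every transfer moves one bit per pin across the whole bus.
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * gigatransfers_per_s


if __name__ == "__main__":
    bw = peak_bandwidth_gb_s(BUS_WIDTH_BITS, MEMORY_CLOCK_MHZ)
    print(f"{bw:.0f} GB/s")  # prints "2039 GB/s"
```

The result, roughly 2,039 GB/s, is a theoretical peak that matches the "2 TB/s" class figure. Sustained bandwidth in real workloads is lower.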

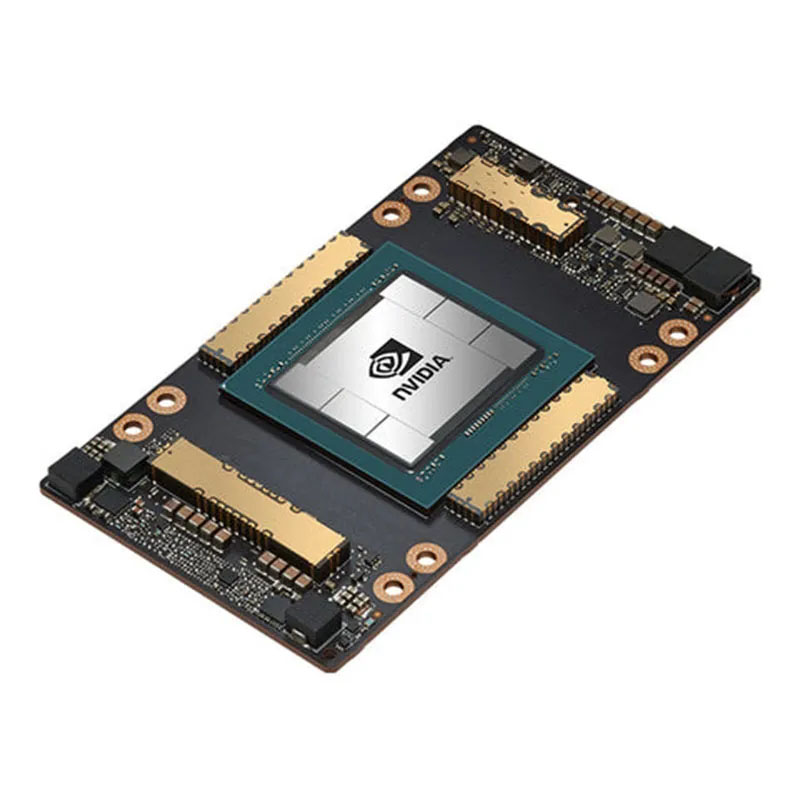

NVIDIA A100 80GB SXM4

The NVIDIA A100 80GB SXM4 is a flagship data center GPU designed for AI, machine learning, and high-performance computing (HPC) workloads. It is built on the Ampere architecture with 54 billion transistors. For AI training it delivers up to 312 TFLOPS of FP16/BF16 Tensor Core performance, or 624 TFLOPS with structured sparsity. For inference it reaches up to 1,248 INT8 TOPS with sparsity. Its 80GB of HBM2e high-bandwidth memory provides about 2 TB/s of memory bandwidth, so larger models and datasets fit on a single GPU and can be fed to the compute units quickly.

Key features include:

- Multi-Instance GPU (MIG) technology, which partitions the GPU into up to seven isolated instances for better resource utilization.
- Third-generation NVLink, which provides up to 600 GB/s of GPU-to-GPU bandwidth.
- Fine-grained structured sparsity support for accelerated inference.

The SXM4 form factor mounts directly on the server baseboard. This supports a higher power budget (400W TDP, compared with 300W for the PCIe version) and server-level cooling. The GPU accelerates research and, depending on the workload and cluster size, can cut training times for large models from weeks to hours. It also enables real-time AI inference for mission-critical applications in industries including healthcare, finance, and autonomous vehicles.
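Ampere's sparse Tensor Cores accelerate weights that follow a fine-grained 2:4 structured pattern, in which each contiguous group of four values has at most two non-zero entries. That pattern is why the sparse throughput figures are roughly double the dense ones. The sketch below only illustrates what the pattern looks like, using simple magnitude pruning in plain Python. The function names are hypothetical, and this is not NVIDIA's pruning tooling. In practice, pruned models are typically fine-tuned afterward to recover accuracy.

```python
# Illustrative 2:4 structured sparsity: in every contiguous group of four
# weights, keep the two largest-magnitude values and zero the other two.
# Hypothetical helper names; this is NOT an NVIDIA API.

from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Return a copy of `weights` with a 2:4 sparsity pattern applied.

    Simplification: operates on a flat list whose length is a multiple of 4.
    Real tooling prunes along a specific dimension of a weight matrix.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def is_2_of_4(weights: List[float]) -> bool:
    """Check that every group of four has at most two non-zero entries."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0.0) <= 2
        for s in range(0, len(weights), 4)
    )


if __name__ == "__main__":
    w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
    p = prune_2_of_4(w)
    print(p)             # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
    print(is_2_of_4(p))  # True
```

Half of each group is zero, so the hardware can store only the non-zero values plus small position indices and skip the zeroed multiplications.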

Minimum Order Quantity (MOQ): 1 piece

Bulk Order Discounts Available

Contact us for more discounts!